Trusting Trust in the Fediverse

Implied trust and how easy it is to break it

Published 2026-02-10

None of the techniques and issues described in this article, except maybe one, are particularly new.

Most of them have already been tested or deployed in the wild and discussed extensively.

This article is mostly a collection of my own thoughts and posts about various attempts at "privacy and safety" measures taken by certain projects, and why they are useless. I gathered them over the last ~3 years on the Fediverse and placed them into one link I can send to people.

It certainly isn't a purely technical write-up documenting the issues, and I will simplify certain details to make it accessible to more people.

Consider yourself warned: some things are deliberately not fully explained.

This post is very long, so grab some snacks, #cofe, beer or your favorite beverage, and feel free to take breaks when reading it.

Before I start, it is important to define what I mean by "Fediverse". In this context, "Fediverse" means a network of connected servers (instances) that communicate using the ActivityPub protocol.

Diaspora, OStatus, Nostr and others are therefore excluded. Although they may have similar or identical issues related to implied trust, they are out of scope here.

By "implied trust" I simply mean the trust a user implicitly gives to the instance administrator and to the network as a whole without thinking about it: for example, that a block actually blocks the user, that a private message won't be made public, and that a follow actually follows the intended user without, say, leaking the action to everyone on a public timeline.

A brief high-level overview of ActivityPub

ActivityPub, usually shortened to AP, is an open standard published by the W3C for decentralized, federated social networks. It is built on top of the Activity Streams 2.0 (usually shortened to AS2) data model and the Activity Vocabulary. Unlike Twitter, Facebook and similar platforms, there is no central authority that controls the whole network. If you have ever heard of Mastodon (and chances are very high if you are reading this), then you have heard of one of the many ActivityPub implementations. A federated network of multiple servers, usually referred to as instances, makes up the Fediverse (Fedi). The most common comparison to other communication technology is e-mail: there are many e-mail services (Google's Gmail, Microsoft's Outlook and many more) that speak a single protocol and coexist on the same network.

A single instance has no authority over other instances and no influence on the content of the network beyond its own local posts and its own view of the network as a whole. In other words, an administrator of instance A can moderate the content displayed to users of A, and can somewhat influence what content from instance A other instances can see. But it cannot, for example, delete posts from users on instance B for everyone else on the network; it can only delete them for users local to instance A. This can create a dynamic where administrators want to enforce their moderation views on other instances by trying to prevent federation with instances they deem bad: a crude attempt at DRM for posts, which will be discussed later.

"Gargron, can you ban this idiot?" is exactly how moderation does not work.

The protocol mostly assumes that every instance on the network plays by an implied set of rules and isn't in any way malicious. By malicious I don't only mean creating spam, but also exploiting various weaknesses in the protocol, like infinite reply recursion. Implementations have to protect themselves against malicious implementations and instances, which isn't unusual for decentralized protocols and networks. Hence the implied trust in certain aspects of the protocol.

To understand parts of what I'll be talking about throughout the rest of this article, it helps to understand what ActivityPub looks like under the hood. In order not to bore some of you, I'll gloss over what likes, repeats and reacts look like and, for now, focus only on posts.

A post in AP vocabulary is referred to as an Object with the type Note, and it contains multiple fields, the most important of which are:

- id - a unique identifier used for identifying Objects
- type - which in this case is Note
- attributedTo - used for attributing the post to a user
- content - which, as the name suggests, is the content of the post you write
- cc and to - used for addressing the post to certain people, or to the public

Users in AP vocabulary are called Actors, and post addressing (scope) is done by simply putting Actors or their followers Collections (lists of Actors that follow the Actor who made the post) into the cc and to arrays. For addressing the public, a special URI is used, https://www.w3.org/ns/activitystreams#Public, which is often shortened to as:Public.

```json
{
  "id": "https://example.com/~mallory/note/72",
  "type": "Note",
  "attributedTo": "https://example.net/~mallory",
  "content": "This is a note",
  "published": "2015-02-10T15:04:55Z",
  "to": ["https://example.org/~john/"],
  "cc": [
    "https://example.com/~erik/followers",
    "https://www.w3.org/ns/activitystreams#Public"
  ]
}
```

Example Object 16 from the ActivityPub specification

With the prerequisites covered, we can now delve into some aspects of breaking implied trust.

Post confidentiality, or lack thereof

As described above, AP allows addressing posts to certain users or groups of users. This is done through the cc and to arrays mentioned earlier.

The post audience (who can see the post) is simply the union of both arrays, and the way the arrays are interpreted distinguishes between several predefined visibilities:

- Public - Visible on all timelines including The Whole Known Network (TWKN) for Pleroma and All servers Live feed on Mastodon

- Unlisted - Visible on Home timelines (the default one where posts from accounts the user follows appear), but not on TWKN

- Followers Only (FO)/Locked - Posts visible only to accounts that follow the user who made the post

- Direct (DMs) - Only visible to accounts mentioned in the post

For example, differentiating between Public and Unlisted is simply based on where the as:Public URI is placed. In the convention used by Mastodon and Pleroma, if it is in to, the post can be considered Public, and if it is in cc, it can be considered Unlisted.

If as:Public appears in neither array and the Actor's followers Collection is addressed (together with any Actors mentioned in the post), the post can be considered Locked.

And lastly, if neither as:Public nor the followers Collection is addressed and only the Actors mentioned in the post are listed, the post can be considered a DM. In practice it can be more complicated, since some implementations use additional scopes, but this is the gist.
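The rules above can be sketched as a small classifier. This is a minimal illustration assuming the Mastodon/Pleroma convention (as:Public in to for Public, in cc for Unlisted); the function names are hypothetical, and followers_url is assumed to be read from the author's Actor document (its followers property) rather than guessed from the URL.

```python
from typing import Any

AS_PUBLIC = "https://www.w3.org/ns/activitystreams#Public"
# Compacted JSON-LD forms of the same URI that some implementations emit
PUBLIC_ALIASES = {AS_PUBLIC, "as:Public", "Public"}


def as_list(value: Any) -> list:
    """to/cc may be missing, a single string, or a list."""
    if value is None:
        return []
    if isinstance(value, str):
        return [value]
    return list(value)


def classify_visibility(note: dict, followers_url: str) -> str:
    """Heuristically map a Note's to/cc addressing to a named scope."""
    to = as_list(note.get("to"))
    cc = as_list(note.get("cc"))
    if any(uri in PUBLIC_ALIASES for uri in to):
        return "public"
    if any(uri in PUBLIC_ALIASES for uri in cc):
        return "unlisted"
    if followers_url in to or followers_url in cc:
        return "followers-only"
    # Only explicitly addressed Actors remain
    return "direct"
```

Nothing here is enforced by the protocol: the scope is purely an interpretation of two arrays, which is exactly why a modified instance can reinterpret them.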

Now you may be asking where I am going with this. The answer is simple. What stops an instance from meddling with the two addressing fields and therefore changing the scope of the post for its users? Nothing. Since the source code for the vast majority, if not all, of AP implementations is public and available to everyone, they can be modified to meddle with the scope. Some may call this malicious behavior, some may call this a feature. This is the core of why everything you post on the Fediverse should be considered public and viewable to everyone.

To calm some people down: it is not that simple to pull this off due to how federation works, and there are some roadblocks that prevent mass changing of scope. First, as I said above, there is no central authority for the network, so a modified instance with this behavior only changes the scope of the post for its own users. This mostly mitigates the impact, as users who want this changed behavior would have to know of an instance that has it and either open the post on that instance or join it. Second, changing Unlisted to Public isn't useful at all, since Unlisted posts are already publicly visible. Only the FO and Direct scopes are interesting to attack, so what protections are in place there? FO and Direct posts aren't federated as widely as usual; instead they can be delivered only to instances that are themselves addressed in the post, i.e. the instances of the mentioned Actors or of the author's followers. The AP spec doesn't mandate this, as it doesn't even define scopes besides Public, but the implementations users usually interact with have this kind of protection or something very similar. Therefore, to pull this attack off for FO posts, a follow relationship between the originating and the malicious instance must already exist, and for Direct posts, a user on the malicious instance has to be explicitly mentioned. That mostly mitigates this attack, as there aren't many large instances with this malicious behavior.
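The delivery restriction for FO and Direct posts can be sketched like this: only instances hosting an addressed Actor, or hosting one of the author's followers, ever receive the post. This is a minimal sketch with hypothetical names; real implementations resolve these Actors to inbox (or sharedInbox) URLs rather than stopping at hostnames, and follower_uris is assumed to come from the local database.

```python
from urllib.parse import urlsplit

AS_PUBLIC = "https://www.w3.org/ns/activitystreams#Public"
PUBLIC_ALIASES = {AS_PUBLIC, "as:Public", "Public"}


def as_list(value):
    if value is None:
        return []
    if isinstance(value, str):
        return [value]
    return list(value)


def delivery_hosts(note, followers_url, follower_uris, local_host):
    """Instances a non-public Note would be pushed to.

    The followers collection is never delivered to as such; it is
    expanded into the hosts of the author's known followers.
    """
    hosts = set()
    for uri in as_list(note.get("to")) + as_list(note.get("cc")):
        if uri in PUBLIC_ALIASES:
            continue  # not expected for FO/Direct posts; ignored here
        if uri == followers_url:
            hosts.update(urlsplit(f).hostname for f in follower_uris)
        else:
            hosts.add(urlsplit(uri).hostname)
    hosts.discard(None)
    hosts.discard(local_host)  # local recipients need no federation
    return hosts
```

A malicious instance therefore only sees such a post if one of its users is a follower or is mentioned, which is why the scope-changing attack has limited reach.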

Among other ways of mitigating this, defederating instances with this malicious behavior can help, depending on the AP implementation. Quarantining instances (a Pleroma term) with this behavior mitigates it completely, as non-public posts never get sent to those instances. Signed fetch (sometimes also called authorized fetch) is irrelevant in this case, since non-public posts usually cannot be fetched by other instances and are only pushed to them. More on circumventing signed fetch, where it does matter, later.

Another avenue for mitigating this issue might be end-to-end encryption (E2EE), similar to how it is deployed on various instant messaging platforms. While there have been some developments with regard to E2EE, they are still in their early stages and far from being accessible to most Fediverse users. For example, none of the major implementations have made serious attempts at solving the issues of E2EE on AP.

If you are interested in reading about E2EE, lain has a nice article about the basics of E2EE and how it could possibly be implemented in the Fediverse. Soatok also has a blog mostly about encryption with relations to the Fediverse. There is some not-so-SFW imagery on the latter. You've been warned.

Quote posts and post interaction consent

Quote posts have long been considered a harassment tool, mostly by (ex-)Twitter users, since they promote what I call the "Hey followers, look at this dumb idiot" behavior.

It doesn't help that in most cases the quote post no longer mentions the quoted user, so they are left in the dark and may never learn about the post that quoted them.

Despite that, quote posts have existed on the Fediverse for a long time, implemented by numerous AP implementations, namely Misskey, Akkoma and Pleroma, and, except in some cases, they are a reasonably used feature.

Fast-forward to 2025: Mastodon started working on a consent-driven quote post implementation that differed from every other implementation. To the surprise of nobody, it was met with loud disapproval from what is likely a loud minority of Mastodon users, with quote posts being a harassment tool as the most cited reason why Mastodon should abandon the effort.

So what is this consent thing about?

Enter GoToSocial interactionPolicy.

Interaction consent done the ActivityPub way

First of all, what does interaction consent mean?

It covers any interaction a user can perform on a post, which includes replying to it, repeating it, favoriting it or emoji-reacting to it.

Those interactions are called Activities in AP, and the relevant ones here are:

- Create - an Activity that creates an Object (a post), and therefore also a reply
- Announce - an Activity that announces the Object it references to the followers of the announcer, i.e. repeating
- Like - indicates that the Actor likes the Object the Activity references, i.e. favoriting

```json
{
  "cc": [],
  "id": "https://example.com/~john/activity/89",
  "to": ["https://example.com/~mallory"],
  "type": "Like",
  "actor": "https://example.com/~john/",
  "object": "https://example.com/~mallory/note/72"
}
```

Example Like Activity referencing the Object from earlier

Since AP never specified anything consent-driven besides following users, this can only be done on a best-effort basis and works only in implementations that support it. That is already a big issue, but the GoToSocial documentation already mentions it, so it is nothing new.

To give a simplified version of how this works, I must first explain how Activities get federated.

Every Actor needs an inbox in order to receive AP Activities.

This inbox can either be an instance-wide one, or specific to one Actor.

To give an example:

- Instance-wide - https://example.com/inbox
- Actor-specific - https://example.com/users/user1/inbox

Activities are simply HTTP POSTed to one of these inboxes, and the POST request is signed with HTTP Signatures using the private half of the Actor's asymmetric key pair.

The purpose of the signature is to prevent impersonation of the user.

Without it, anyone on the network could create posts as anyone else on the network.

When a post or any other interaction is made, the instance where it was created simply HTTP POSTs the Activity to all instances that should receive it (usually the instances of all followers of the user who created the post).

```
keyId="https://example.com/user/johndoe#main-key",algorithm="rsa-sha256",headers="host date accept (request-target)",signature="<TRUNCATED>"
```

Example HTTP signature

In the above example, keyId is the URL of the public part of the Actor's key pair, headers lists the request headers covered by the signature, and signature is the signature over those headers.
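To make the signature part more concrete, here is a minimal sketch of what a receiving instance does with that header, assuming the draft-cavage HTTP Signatures format that most Fediverse software uses: it splits the parameters and rebuilds the exact string the sender signed. The function names are hypothetical, and the final RSA-SHA256 check against the public key fetched from keyId is left out to keep the example dependency-free.

```python
import re

# key="value" pairs inside a Signature header (values contain no quotes)
_PARAM = re.compile(r'(\w+)="([^"]*)"')


def parse_signature_header(value: str) -> dict:
    """Split 'keyId="...",algorithm="...",...' into a dict."""
    return dict(_PARAM.findall(value))


def signing_string(sig: dict, method: str, path: str, headers: dict) -> str:
    """Rebuild the text the sender signed, in the order given by 'headers'."""
    lowered = {name.lower(): val for name, val in headers.items()}
    lines = []
    # The draft defaults to signing only the Date header if 'headers' is absent
    for name in sig.get("headers", "date").lower().split():
        if name == "(request-target)":
            lines.append(f"(request-target): {method.lower()} {path}")
        else:
            lines.append(f"{name}: {lowered[name]}")
    return "\n".join(lines)
```

The verifier then fetches the Actor behind keyId, takes its public key, and checks the base64-decoded signature over this string. Note that the check only proves possession of that key, not which server sent the request.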

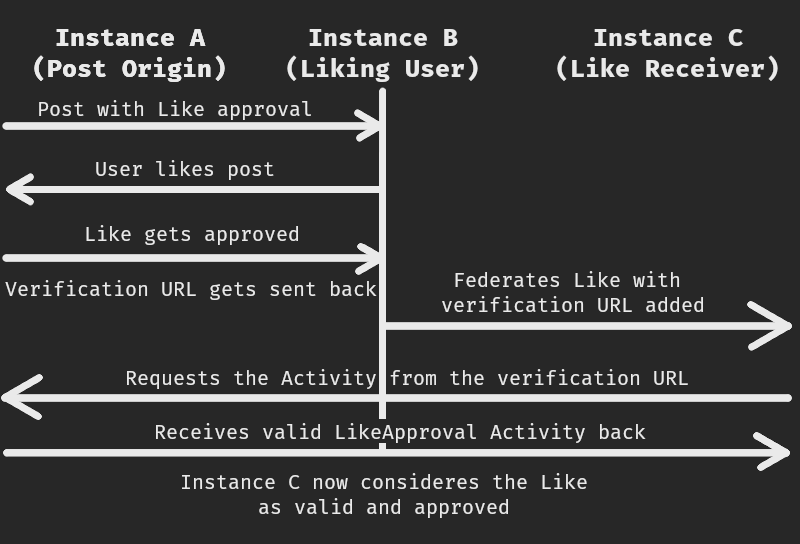

With that out of the way, here's how interactionPolicy works at a high level.

Objects that can be interacted with, in this example a post (an Object of type Note), get an interactionPolicy embedded in them, which defines who is allowed to perform each of the three Activities mentioned above.

There are two kinds of interaction approval (consent) an Actor can fall under: automaticApproval and manualApproval.

For the purposes of this example, I'll ignore the automatic one, because as the name suggests it is automatic and therefore there is no implied trust to break.
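Evaluating such a policy can be sketched as below. The shape assumed here (canLike, canReply and canAnnounce sub-policies, each with automaticApproval and manualApproval lists of Actor or collection URIs) follows GoToSocial's interactionPolicy as I understand it; treat the exact field names, the Activity-to-key mapping and the fallback for a missing policy as assumptions. actor_collections is a hypothetical input listing collections the interacting Actor belongs to, such as the author's followers.

```python
AS_PUBLIC = "https://www.w3.org/ns/activitystreams#Public"
PUBLIC_ALIASES = {AS_PUBLIC, "as:Public", "Public"}

# Assumed mapping from Activity type to interactionPolicy sub-policy
POLICY_KEYS = {"Like": "canLike", "Create": "canReply", "Announce": "canAnnounce"}


def as_list(value):
    if value is None:
        return []
    if isinstance(value, str):
        return [value]
    return list(value)


def _listed(entries, actor, actor_collections):
    """True if the actor is named directly, via as:Public, or via a collection."""
    for entry in as_list(entries):
        if entry in PUBLIC_ALIASES or entry == actor or entry in actor_collections:
            return True
    return False


def approval_mode(note, activity_type, actor, actor_collections=frozenset()):
    """Return 'automatic', 'manual' or 'denied' for this interaction."""
    policy = note.get("interactionPolicy")
    rule = None if policy is None else policy.get(POLICY_KEYS[activity_type])
    if rule is None:
        # Assumption: no (sub-)policy means the legacy "anyone may interact"
        return "automatic"
    if _listed(rule.get("automaticApproval"), actor, actor_collections):
        return "automatic"
    if _listed(rule.get("manualApproval"), actor, actor_collections):
        return "manual"
    return "denied"
```

Only instances that run code like this honour the policy at all; everyone else never looks at the field.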

Now let's consider a user from a remote instance who wants to favorite the post and is in the manualApproval list.

How does that work?

- The interacting user's instance sends a normal Like Activity only to the inbox of the Actor who made the post the user wants to favorite.
- The receiving instance (the instance where the post being interacted with is located) then displays a "pending approvals" message for it, and the user can either accept or reject the Like, which sends the respective Accept or Reject Activity back to the interacting user's instance.
- If the interaction was Rejected, the interaction is deleted and never federated further.
- If the interaction was Accepted, the original Like is federated further with a special approvedBy field pointing to the URI from the result field of the Accept Activity. That field serves as a pointer telling other instances where they can verify that the interaction is legitimate.
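The verification step that other instances are supposed to perform can be sketched as follows. fetch is a hypothetical callback returning the JSON-decoded object at a URI (in practice a signed GET), and the interactingObject and interactionTarget field names follow FEP-044f's QuoteAuthorization; assuming the same names for Like approvals is my assumption.

```python
from typing import Callable, Optional
from urllib.parse import urlsplit


def verify_approval(interaction: dict, post: dict,
                    fetch: Callable[[str], Optional[dict]]) -> bool:
    """Check that approvedBy points at an approval issued by the post
    author's instance for this exact interaction and post."""
    approved_by = interaction.get("approvedBy")
    if not approved_by:
        return False
    # Only the instance hosting the post's author can legitimately approve
    if urlsplit(approved_by).hostname != urlsplit(post["attributedTo"]).hostname:
        return False
    approval = fetch(approved_by)
    if approval is None or approval.get("id") != approved_by:
        return False
    # The approval must name both the interaction and the interacted-with post
    return (approval.get("interactingObject") == interaction.get("id")
            and approval.get("interactionTarget") == post.get("id"))
```

Everything in this check is voluntary: a receiving instance that simply never calls it accepts any interaction, which is the weakness discussed in the next section.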

The above example can be illustrated as:

Example GoToSocial interactionPolicy flow for a Like Activity

Mastodon's consent-driven quote posts are simply an extension of this extension and work the same way.

Disregarding user consent

Since a large portion of the network does not support this AP extension and effectively disregards this consent approval, GoToSocial (and likely Mastodon) also implements local filtering of interactions, so that when an unauthorized interaction happens, it is at least hidden from the user whose post was interacted with. This creates a false sense of safety for users who are unaware of the inner workings, which is most users. The specific issue this extension was supposed to solve can still occur, and can happen without the affected user ever finding out about it. The end result leaves the user in the dark, with limited options for responding in cases of harassment or of lies in replies and quote posts.

Of course this isn't the only issue with how this works and if you followed the discussions about this feature getting added to Mastodon, you probably know where I'm going with this.

As with the scoping issues above, it is trivial to modify AP implementations that support this extension to simply strip the interactionPolicy object from posts, which effectively removes the consent part completely for the instance running this patch.

It is also trivial to modify implementations that already support this consent approval to simply never check whether an interaction was approved when they receive an Activity that requires approval verification.

Now, if those patches spread among Fedi circles that don't like this extension but whose implementations support it (and they will), the whole point of the extension is side-stepped.

Compared to scope changing, this has far more wide-reaching implications, since there is no special handling when federating likes and repeats. (Some implementations support scopes for likes and repeats, but it is a rather rare feature.)

Replies aren't bound to the scope of the post they reply to, and quotes can have different scopes, although a narrower-scoped quote would be effectively useless (at least without the scope changing described earlier).

So instead of local-only scope changes, we are now in a situation where the harassed user has no idea what is happening and the instances interested in harassing them cannot be stopped.

Of course, nothing prevents anyone from taking screenshots of the post and bypassing quote approval that way either.

Now you may be thinking, there must be a way to prevent this.

Right?

Well, sort of.

This brings us to defederating instances deemed bad and deliberately trying to prevent them from seeing posts once they are defederated.

Enter signed fetch and signature spoofing.

How does fetching posts work?

Once again a brief overview of the fetching part of federation is needed.

There are two modes of federation.

ActivityPub is mainly a push protocol, meaning that when an Actor makes a post, that post is pushed to other instances.

But this creates an issue when a reply to a post gets federated to an instance which does not know about the parent.

AP solves this issue by adding an inReplyTo field to replies, which contains the URI of the parent (the post being replied to).
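Resolving a reply's ancestors then boils down to following inReplyTo upwards. A minimal sketch, assuming a hypothetical fetch callback that returns the parent object or None when the fetch fails; the depth limit and cycle check guard against the infinite reply recursion mentioned earlier, and a None result is exactly where a thread appears cut off.

```python
from typing import Callable, Optional


def _uri(ref):
    """inReplyTo may be a bare URI or an embedded object with an id."""
    if isinstance(ref, dict):
        return ref.get("id")
    return ref


def fetch_ancestors(post: dict, fetch: Callable[[str], Optional[dict]],
                    max_depth: int = 50) -> list:
    """Walk inReplyTo upwards, nearest parent first.

    Stops at the root, at max_depth, on a cycle, or when a fetch fails
    (for example because the remote instance refused to answer).
    """
    chain = []
    seen = {post.get("id")}
    parent_uri = _uri(post.get("inReplyTo"))
    while parent_uri and len(chain) < max_depth:
        if parent_uri in seen:
            break  # reply loop: broken or malicious data
        seen.add(parent_uri)
        parent = fetch(parent_uri)
        if parent is None:
            break  # the thread is cut off here
        chain.append(parent)
        parent_uri = _uri(parent.get("inReplyTo"))
    return chain
```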

That worked fine for some time until the ability to fetch any post by simply knowing its URI and sending an HTTP GET request was deemed bad.

To "fix" this issue, the same mechanism used to prevent impersonation of users when federating posts was reused here.

Any fetch for an Object now had to be signed with the instance's asymmetric key pair, to prove that it is the instance asking for the Object and not some John Doe.

If an instance was configured to enforce this "authorized" way of fetching Objects, it would simply refuse to respond with the Object if the request wasn't properly signed or the signature didn't match the key of the instance it claimed to come from.

And now, since an instance has to identify itself when fetching an Object, a defederated instance not only stops receiving posts through the usual push, it also cannot fetch them (depending on the implementation used).
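From the serving side, enforcing signed fetch roughly amounts to the check below. This is a simplified sketch, not any particular implementation's code: verify is a hypothetical callback standing in for fetching the keyId Actor's public key and checking the signature, and the status codes are illustrative.

```python
import re
from typing import Callable, Mapping
from urllib.parse import urlsplit

# key="value" pairs in a draft-cavage Signature header
_PARAM = re.compile(r'(\w+)="([^"]*)"')


def authorize_fetch(headers: Mapping[str, str], blocked_domains: set,
                    verify: Callable[[dict], bool]) -> int:
    """Decide how to answer a GET for an Object under signed fetch.

    Returns an HTTP status: 401 unsigned or invalid, 403 blocked, 200 ok.
    """
    raw = headers.get("Signature")
    if raw is None:
        return 401  # unsigned fetches are refused outright
    sig = dict(_PARAM.findall(raw))
    key_host = urlsplit(sig.get("keyId", "")).hostname
    if key_host is None:
        return 401
    if key_host in blocked_domains:
        return 403  # the blocklist only ever sees the *claimed* domain
    if not verify(sig):
        return 401  # signature does not match the key keyId points to
    return 200
```

Every decision is keyed on the domain claimed in keyId. As long as the signature verifies, nothing ties the request to the server that actually sent it.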

We've entered the era of deliberately breaking federation and threads for users on instances deemed bad.

If you've seen a thread where a post is seemingly a reply with mentions but the rest of the thread does not exist, or where replies from users on some instance are missing, you've seen this "feature" in action, working as designed.

```
keyId="https://example.com/instance-actor#main-key",algorithm="rsa-sha256",headers="host date accept (request-target)",signature="<REMOVED>"
```

Example HTTP signature using instance's Service Actor

To remind ourselves, this is what an HTTP signature looks like when used on the Fediverse.

It is the same as in the previous example with one exception.

Instead of referencing a user's key in keyId, as is done when federating posts, the implementation uses its own so-called Service Actor and its key.

Now, can we break this scheme and unbreak our broken threads? We can, in two vastly different ways.

Am I who I say I am

Some of you might already see an issue with this scheme.

A signature check that was originally used to prove ownership of a key and prevent impersonation is now a simple "does this signature match the Actor it references" check.

Why can't we say that we are someone else?

After all, all we need is a key pair belonging to some other Actor, and we can pass ourselves off as them.

That's how this "feature" was originally side-stepped.

An administrator of a defederated instance created a donor instance, extracted the key pair from it and made their defederated instance use that key pair for signing fetches.

The instances that had defederated the administrator's instance saw a fetch with a signature, looked up the Actor it referenced (if they didn't already know about it), saw that the signature was valid and responded with the Object.

The defederated administrator got the proper Object, and the instances that defederated him were none the wiser.

This is nothing new and has been used for years now.

Attempting to mitigate impersonation

There are some ways one can try to detect impersonation, but they have their own limits.

- Checking whether the IP of a fetch request matches the resolved IP of the domain in the signature.
  - This is largely unworkable, as instances can be behind Cloudflare, in which case the IP an instance sends requests from differs from what is in its DNS records. I'll show you how to bypass this later.
- Checking for newly federating domains.
  - This can create many false positives, as someone might have created a new instance and not posted anything yet while being a legitimate administrator. Also, slipping up once makes this mitigation worthless.
- Banning the IPs of defederated instances directly, in addition to defederating them normally.
  - Largely a good mitigation, unless the defederated instance compiles a list of instances that block it from its logs and sends traffic to them through a different IP. Unlikely, but still something that might exist, and I'll bypass this mitigation below anyway. Also, instances behind Cloudflare would stop working at random, since Cloudflare does not give customers unique IPs and instead assigns them IPs from a large pool.
- Checking whether the User-Agent of an HTTP request contains a defederated instance's domain.
  - Also a mostly good mitigation, until the defederated instance stops including its domain in the User-Agent. Although this makes the requests stand out more (there are only GET requests for Objects and few or no POST requests, since little to no interaction with the posts happens), the likelihood of blending in with other requests, especially on larger instances, is high. I'll also bypass this mitigation below.
- Forcing an impersonating instance to reveal the impersonation domain. This can be done via search on the defederated instance, or by briefly lifting the defederation and mentioning someone from that instance in a post using the usual Misskey-fork $INSTANCE$host$ trick, which inserts an instance's domain into the post on the fly.
  - Easily countered by the defederated instance rejecting or modifying posts that contain the impersonation domain.
- More that I haven't thought of yet...
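The first and fourth checks from the list can be sketched as simple red flags. This is an illustrative sketch with hypothetical names; resolve defaults to a plain DNS lookup via socket.getaddrinfo, and, as described above, the IP comparison produces false positives for instances behind Cloudflare while both checks are easy to evade.

```python
import socket


def resolve_ips(host):
    """All addresses the host's DNS records point to (e.g. Cloudflare edges)."""
    infos = socket.getaddrinfo(host, 443, proto=socket.IPPROTO_TCP)
    return {info[4][0] for info in infos}


def fetch_red_flags(remote_ip, user_agent, key_host, blocked_domains,
                    resolve=resolve_ips):
    """Return human-readable reasons a signed fetch looks suspicious."""
    flags = []
    ua = (user_agent or "").lower()
    for domain in sorted(blocked_domains):
        if domain.lower() in ua:
            flags.append(f"User-Agent mentions blocked domain {domain}")
    try:
        expected = resolve(key_host)
    except OSError:  # socket.gaierror is a subclass of OSError
        expected = set()
    if remote_ip not in expected:
        flags.append(f"source IP {remote_ip} not in DNS records of {key_host}")
    return flags
```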

Hidden Fetcher - Bypassing impersonation mitigations by not impersonating someone

This is a newer approach to bypassing signed fetch that I have not seen discussed on Fedi, apart from my own mentions of it once or twice over the last year or two, nor have I seen anyone try it. It is a logical extension of impersonation, since it already requires a donor instance. So why not use the donor instance to fetch the Objects for the defederated instance instead?

All that is required is a modified AP implementation (the Hidden Fetcher) with a special endpoint that fetches an Object and returns it, plus another modified AP implementation that, based on some heuristics, uses that endpoint to fetch Objects from instances it has been defederated by.

Not as easy as replacing the used key pair for fetches, but it shouldn't be that hard to implement.

Of course, protections can be added so that only a whitelisted instance can use the special endpoint. A random delay can also be added to requests made by the Hidden Fetcher, so that they can't easily be correlated with the failed ones made by the defederated instance.

Alternatively, the defederated instance can store a list of instances it has been defederated by and use the special endpoint directly for them and not only after it encountered an error.

There are many other ways to optimize this that I won't list, because this article would end up much longer than it already is.

And it already is very long.

So what are the options for mitigating this signed fetch defederation bypass? I'm not really sure. All of the mitigations listed above, except checking for new instances, have been bypassed or would not apply in this case. One can play the endless cat-and-mouse game of vetting new instances, but the Hidden Fetcher can be dressed up as anything that uses ActivityPub: a WordPress site with the ActivityPub plugin, a blog using Ghost, a GoToSocial instance with unauthenticated web viewing of profiles and posts disabled, and so on. It can be disguised as anything to avoid detection, just like the donor instance in the case of plain impersonation.

Closing thoughts

You have now read about the three main issues I have with the new-ish "safety" mechanisms that got added on top of ActivityPub. Hopefully I've made my point at least somewhat clear, along with why I sometimes refer to them on Fedi as "security theater".

They seem good at first glance, but once someone with nefarious thoughts starts thinking about them more deeply, the glaring issues start to show up.

In the end, the seemingly worst mishandling of implied trust in ActivityPub, changing the scope of messages, turned out to be a rather mild issue compared to bypassing signed fetch and to harassment that is intentionally hidden from the victim, leaving them unaware that it even happened.

Especially in the last case, a simple warning about unauthorized interactions, shown by AP implementations that are supposed to support consent-driven interactions, could be useful.
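Such a warning could be as simple as surfacing, rather than silently dropping, interactions that needed approval but arrived without it. A minimal sketch with hypothetical names; requires_approval would come from evaluating the post's interactionPolicy, and checking only for the presence of approvedBy is a simplification (a real implementation should also verify it).

```python
from typing import Callable, Iterable


def triage_interactions(interactions: Iterable[dict],
                        requires_approval: Callable[[dict], bool]):
    """Split interactions into (authorized, unauthorized).

    Unauthorized ones needed approval but carry no approvedBy; a UI could
    show them to the post author as a warning instead of hiding them.
    """
    authorized, unauthorized = [], []
    for interaction in interactions:
        if requires_approval(interaction) and not interaction.get("approvedBy"):
            unauthorized.append(interaction)
        else:
            authorized.append(interaction)
    return authorized, unauthorized
```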

Hopefully I didn't spook you, dear reader, by showing that the trust you and many others put in the Fediverse and the protocol behind it isn't as strong as you thought, and how easily things that arguably shouldn't have been possible can be done.

If you've made it to the end of this long article without a break, you certainly deserve one now.

Grab some #cofe or your favorite beverage and take a break.

Thank you for reading all the way through.

You can comment on this article on the Fediverse: https://fluffytail.org/objects/b3b84a83-60fb-4ad8-aee2-44cb3d557cd2

PS: If the title seems familiar, that's because it probably is. It is based on Ken Thompson's ACM Turing Award lecture, Reflections on Trusting Trust.